

Documentation

Lost in inference?

Since November 2022 (not that long ago, is it?), you've chatted with ChatGPT, read articles on AI with prophecies ranging from "we won't have to work anymore from next year on" to "we're doomed", perhaps rendered a few funny pictures with Stable Diffusion or Midjourney, and are now waiting for your local bakery or car mechanic to claim that their services are AI-based. Does that about describe your situation?

To start using LLM functionality in a real business context, a few issues must be solved:

- Accessing an LLM through its API and injecting a few-shot training is normally a job for a software developer, whether the model is a cloud-based service like those from OpenAI or AlephAlpha or one that runs locally.

- Creating the instructions that transform the input data as needed, on the other hand, requires knowledge of the input and the desired output data. This is the profile of a power user or technical writer.

- Few-shot trainings are written in the specific syntax of the AI model and thus actually require a combination of the developer and power-user profiles.

- API keys for LLM services should not be widely distributed among users, to avoid abuse.

- Few-shot training cycles are cumbersome: write cryptic JSON code, copy it elsewhere, run the software and try again.

- A service might work well, but what happens to the data uploaded to it, and which data must not be uploaded?


A solution that you can start using tomorrow

djAI addresses all of these issues.

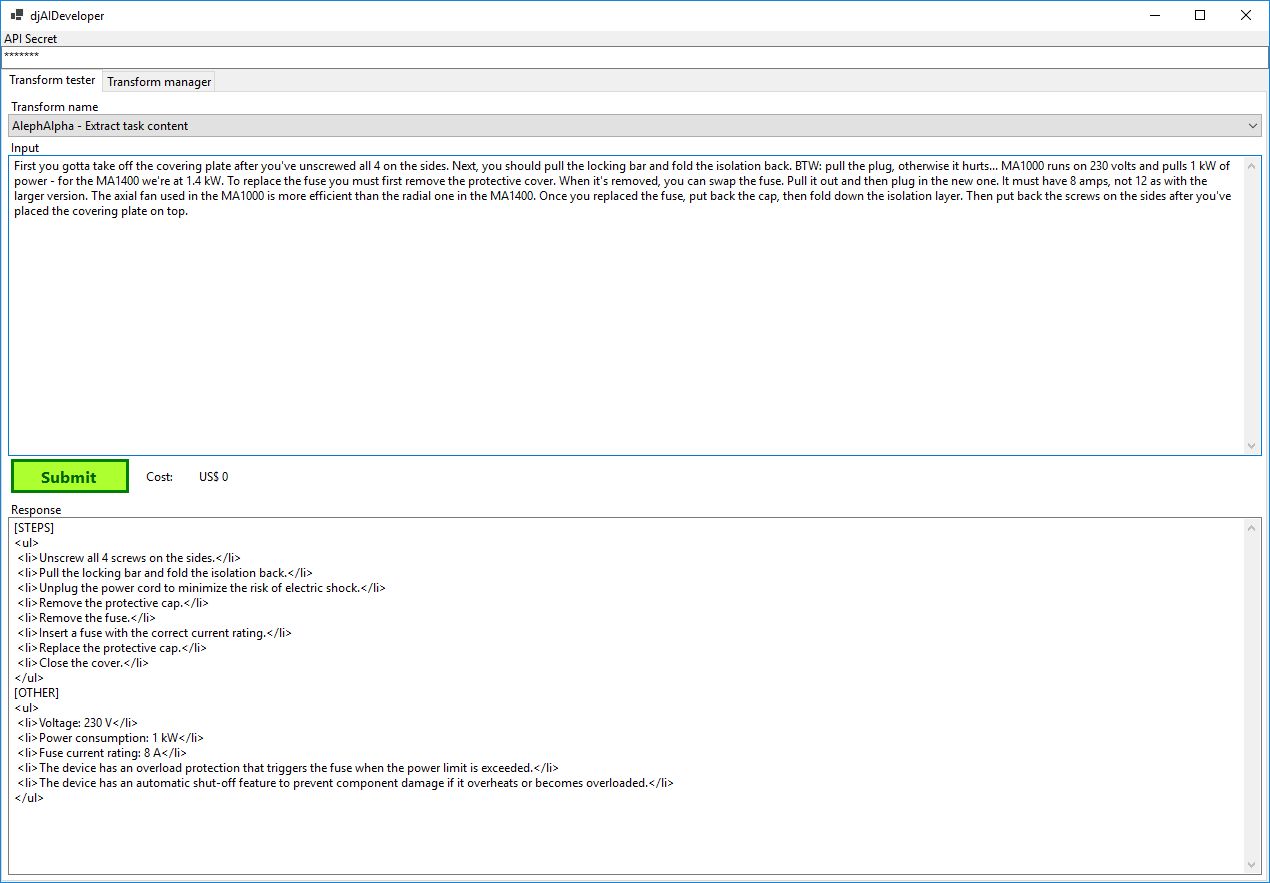

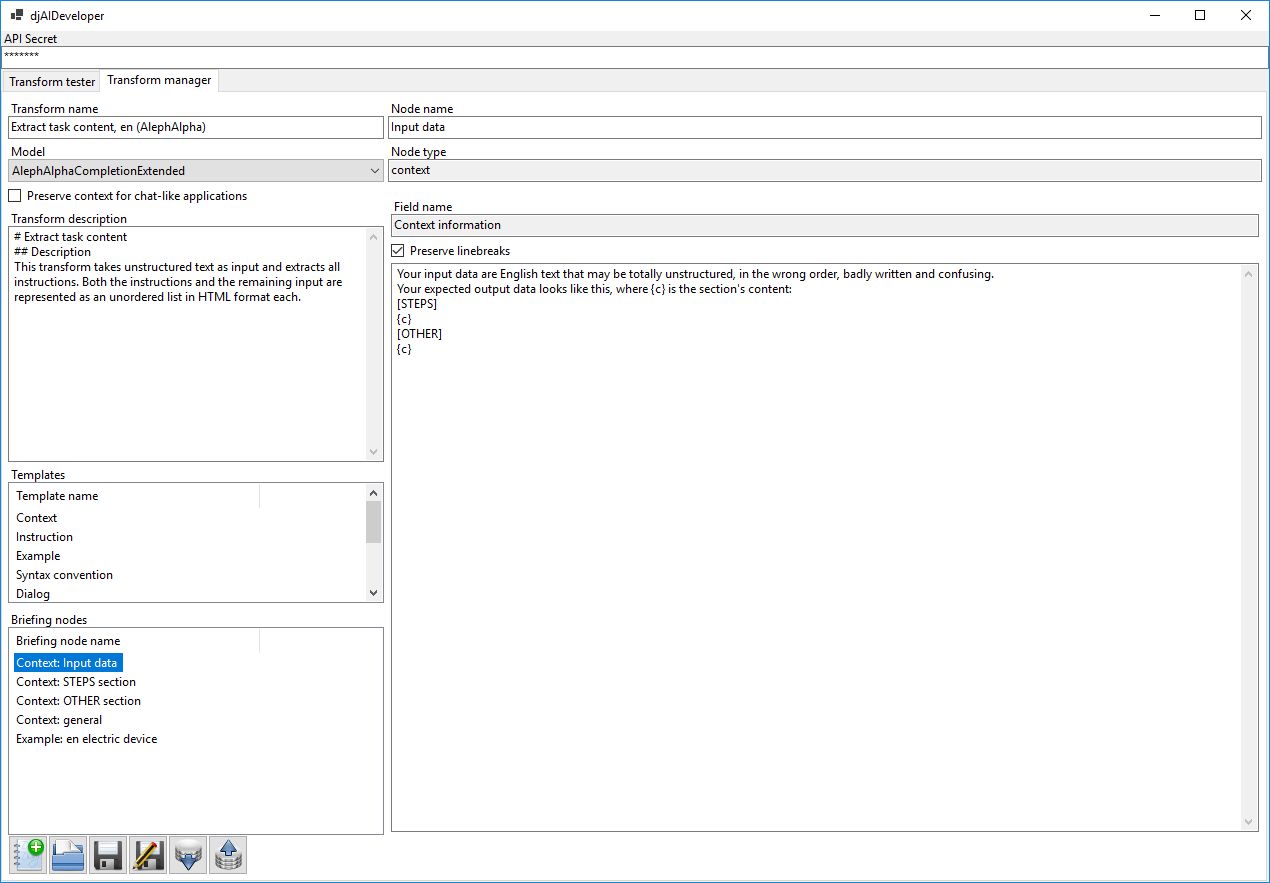

- It provides a graphical user interface that lets power users create few-shot trainings by drag and drop, without any cryptic command language. Users who know the data and the desired operation can build a training without any additional skills.

- Trainings can be stored as files and versioned as needed, for example in GitHub or a DMS.

- The current status of a training in the editor can be immediately applied and tested. This shortens turnaround cycles and speeds up development. Realistic trainings with some examples that produce good results can be created in less than half a day.

- The training format is abstract and is translated to the specific LLM's syntax only when it's applied. This allows trying the same training on different models, or different versions of the same model. This way, it is also easy to determine which operations can run on simpler, cheaper versions of a model and which ones require more reasoning and linguistic capabilities.

- The interface supports single-request operations, chat operations (with context management) and single-request operations with dynamically generated trainings. This allows injecting ontology, terminology or other knowledge into the request, or automatically attaching examples chosen by keywords in the source.

- The inference functionality with defined transforms (as called from the client software) can easily be integrated into your own software. Any environment able to send HTTP requests can access the djAI server by passing a local secret, the source data and the transform name. The service responds with the AI query result.

- The AI request handling runs behind a web service. It is restricted to the functions defined by the training builders, and each function is linked to a specific AI model such as OpenAI GPT-3.5 or AlephAlpha luminous-extended. Regular users can't change this. This way, transforms that handle data to be protected can use a service like AlephAlpha, with servers in Germany, while OpenAI models handle less sensitive data, where they perform better or well enough for less money.

- Plugins for GPT-3.5, GPT-4 and AlephAlpha are included. Other plugins will follow as required and can be added by implementing a simple software interface.
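To make the HTTP integration more concrete, here is a minimal Python sketch of a client call that uses only the standard library. The endpoint URL, the JSON body and the field names `secret`, `transform` and `source` are assumptions for illustration, not the documented djAI API; the actual request format depends on your server setup.

```python
import json
import urllib.request

# Assumed endpoint; the real path and port depend on your djAI server setup.
DJAI_URL = "http://localhost:5000/api/transform"


def build_transform_request(url: str, secret: str, transform: str, source: str) -> urllib.request.Request:
    """Build (without sending) a POST request carrying the local secret,
    the transform name and the source data. Field names are assumptions."""
    payload = {"secret": secret, "transform": transform, "source": source}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_transform(url: str, secret: str, transform: str, source: str, timeout: float = 60.0) -> str:
    """Send the request and return the response body (the AI query result) as text."""
    request = build_transform_request(url, secret, transform, source)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Build a request without contacting a server, to inspect what would be sent.
req = build_transform_request(DJAI_URL, "my-local-secret", "ExtractInstructions", "Open the lid. Add water.")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

With a server running, `run_transform(DJAI_URL, secret, "ExtractInstructions", text)` would return the result as plain text. Keeping the request construction separate from sending makes the payload easy to inspect and test.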

The client is a .NET 7 application and runs on all recent Windows versions. The server can be accessed over HTTP from any environment that supports HTTP requests.

The djAI server is a .NET 7 application and runs on Windows and Linux. It does not need many resources, since it does not do the AI inference itself but forwards the requests and trainings and returns the results. It can run either on your desktop PC or as a server for many users in the network.

What can I use it for?

To start with, you can run the web service on your local PC. Then, you can use the client as a single-user LLM frontend. You can:

- Configure the AI models you want to use: if you have an account, you can use OpenAI and AlephAlpha, and some open models are available with limited API access.

- Create few-shot trainings for your typical data transformation tasks. Then you can convert data with the predefined transform whenever you need it (as shown in the screenshots above).

- Compose multiple small functions into more complex data transforms. For example, you could first extract some metadata, including the language. Then you could use a transform like the one shown above to extract the instructions, with examples in the language determined in the first step. Finally, you could convert the list of instructions to a DITA task topic. Calling the functions from your own software is as easy as sending an HTTP POST request in your favorite environment.
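The three-step example above could be chained roughly as follows. This is a hypothetical sketch: the transform names (`ExtractMetadata`, `ExtractInstructions_<language>`, `InstructionsToDitaTask`) and the assumption that the metadata transform returns JSON with a `language` field are illustrative only. The `call` parameter stands for whatever function sends a transform request to the server, so a fake implementation can be plugged in for testing.

```python
import json
from typing import Callable

# A caller sends one transform request: (transform name, input text) -> result text.
# In practice it would wrap the HTTP POST to the djAI server.
Caller = Callable[[str, str], str]


def extract_and_convert(call: Caller, source: str) -> str:
    """Chain three small transforms into a larger one (hypothetical transform names)."""
    # Step 1: extract metadata; assumed to come back as JSON, e.g. {"language": "de"}.
    metadata = json.loads(call("ExtractMetadata", source))
    language = metadata.get("language", "en")
    # Step 2: extract the instructions using the training for that language.
    instructions = call(f"ExtractInstructions_{language}", source)
    # Step 3: convert the instruction list to a DITA task topic.
    return call("InstructionsToDitaTask", instructions)


def fake_call(transform: str, text: str) -> str:
    """Stand-in for the server, returning canned answers for testing."""
    if transform == "ExtractMetadata":
        return '{"language": "de"}'
    if transform.startswith("ExtractInstructions_"):
        return f"[{transform}] 1. Deckel anheben"
    return f"<task>{text}</task>"


print(extract_and_convert(fake_call, "Heben Sie den Deckel an."))
# prints: <task>[ExtractInstructions_de] 1. Deckel anheben</task>
```

Passing the caller in as a parameter keeps the composition logic independent of the transport, so the same pipeline works against a real server or a stub.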

When you start writing software with our djAI integration platform, you will probably expose the service to colleagues in your network and run it on a server. Users in the network with credentials can then use the configured AI models, but only through the predefined transforms. Only admin-level users may create, modify or delete training data.
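On the server side, the permission rule described above (everyone with credentials may run transforms, only admins may change them) and the fixed binding of each transform to a model might be modeled like this. The class and field names are hypothetical and do not describe djAI's internal implementation.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    USER = "user"
    ADMIN = "admin"


@dataclass(frozen=True)
class TransformDefinition:
    name: str
    model: str     # fixed by the training author, e.g. "luminous-extended"
    training: str  # abstract training; stored as a file in practice


@dataclass
class TransformRegistry:
    """Hypothetical registry: any user may run transforms, only admins may change them."""
    transforms: dict[str, TransformDefinition] = field(default_factory=dict)

    def save(self, role: Role, definition: TransformDefinition) -> None:
        if role is not Role.ADMIN:
            raise PermissionError("only admin users may create or modify trainings")
        self.transforms[definition.name] = definition

    def delete(self, role: Role, name: str) -> None:
        if role is not Role.ADMIN:
            raise PermissionError("only admin users may delete trainings")
        del self.transforms[name]

    def resolve(self, name: str) -> TransformDefinition:
        # The model comes from the stored definition; a request cannot override it.
        if name not in self.transforms:
            raise KeyError(f"unknown transform: {name}")
        return self.transforms[name]


registry = TransformRegistry()
registry.save(Role.ADMIN, TransformDefinition("ExtractInstructions", "luminous-extended", "<abstract training>"))
print(registry.resolve("ExtractInstructions").model)  # prints: luminous-extended
try:
    registry.save(Role.USER, TransformDefinition("ExtractInstructions", "gpt-4", "<changed>"))
except PermissionError as exc:
    print("rejected:", exc)
```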

In the process described above, you could create a training for each language, with examples in that language. Alternatively, your software could compose the training for each specific request and run it together with the data. Admin accounts can do that, and it allows combining structured data sources such as ontology databases, termbases or data in a CCMS with AI reasoning.
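A training composed per request could, for example, pick examples in the detected language and rank them by keyword overlap with the source, which is one way to realize the keyword-based example attachment mentioned earlier. The sketch assumes a simple keyword set per example and a hypothetical dictionary-based abstract training format; a real system would more likely query a termbase or CCMS than an in-memory list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    language: str
    keywords: frozenset[str]  # lower-case keywords describing the example
    input_text: str
    output_text: str


def select_examples(pool: list[Example], language: str, source: str, limit: int = 2) -> list[Example]:
    """Return up to `limit` examples in `language`, ranked by keyword overlap with `source`."""
    words = {w.strip(".,;:!?").lower() for w in source.split()}
    candidates = [ex for ex in pool if ex.language == language]
    # sorted() is stable (also with reverse=True), so ties keep their pool order.
    ranked = sorted(candidates, key=lambda ex: len(ex.keywords & words), reverse=True)
    return ranked[:limit]


def build_training(instruction: str, examples: list[Example]) -> dict:
    """Assemble a training in a hypothetical model-neutral format."""
    return {
        "instruction": instruction,
        "examples": [{"input": ex.input_text, "output": ex.output_text} for ex in examples],
    }


POOL = [
    Example("en", frozenset({"printer", "toner"}), "Remove the toner. Insert the new one.",
            "1. Remove the toner.\n2. Insert the new one."),
    Example("en", frozenset({"coffee", "filter"}), "Take out the filter. Rinse it.",
            "1. Take out the filter.\n2. Rinse it."),
    Example("de", frozenset({"drucker", "toner"}), "Toner entnehmen. Neuen einsetzen.",
            "1. Toner entnehmen.\n2. Neuen einsetzen."),
]

training = build_training("Convert the text to a numbered list.",
                          select_examples(POOL, "en", "Replace the coffee filter."))
print([ex["input"] for ex in training["examples"]])
# prints: ['Take out the filter. Rinse it.', 'Remove the toner. Insert the new one.']
```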

In the example described above, the system could generate the training by searching the CCMS for a similar DITA task, converting it to HTML, and using the resulting ordered list together with its DITA source as a training example (HTML as input, DITA as output). AlephAlpha, particularly in its larger versions, is very good at producing high-quality results from only one or two good examples.

What happens with my data?

Many people equate LLMs with OpenAI and ChatGPT. While even GPT-3.5 yields very good results in transforms like those explained above, there is the question of whether the data is used for training (you can opt out of this), but also of where the servers are and which laws apply. From an EU legal perspective, handing personal data to OpenAI is a no-go, and entrusting intellectual property to it might cause headaches.

On the other hand, the model is large and reasons very well. Our experiments with open models, including Raven 14B and RedPajama 7B, indicated that these models are very limited compared to GPT-3.5 because they are much smaller. There are larger models, like BLOOM, but they require significant (and thus expensive) hardware.

We found that the German company AlephAlpha and its AI model Luminous solve these issues. AlephAlpha offers servers based in Germany and thus under EU law, and running on premises is also possible. The reasoning of at least the extended version of the model is very good, and our tests showed no notable drawbacks compared with OpenAI. It seems to be very good at learning patterns from sparse examples, and it needs to be: while OpenAI offers models with context lengths of up to 32k tokens, Luminous is restricted to 2k, half the 4k of basic GPT-3.5. Still, some meaningful trainings worked right away with Luminous, and it can be used for commercial applications with sensitive data without restriction.

Anthropic Claude, available on AWS Bedrock, is powerful and supports very large context lengths of up to 100k tokens. That makes it the preferred model for integration with external data sources, because large pools of information can be injected into the prompt.
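The practical effect of these context sizes on few-shot trainings can be estimated with simple arithmetic: whatever remains after the instruction, the source text and the space reserved for the answer is available for examples. The token counts below are illustrative assumptions, and the limits are the approximate values named in the text; actual limits depend on the model version.

```python
# Approximate context sizes in tokens, as named in the text; actual limits
# depend on the model version.
CONTEXT_LIMITS = {
    "Luminous (2k)": 2048,
    "GPT-3.5 (4k)": 4096,
    "GPT-4 (32k)": 32768,
    "Claude (100k)": 100_000,
}


def max_examples(context: int, instruction_tokens: int, example_tokens: int,
                 source_tokens: int, output_tokens: int) -> int:
    """Number of few-shot examples that fit after reserving room for the
    instruction, the source text and the model's answer."""
    free = context - instruction_tokens - source_tokens - output_tokens
    return max(0, free) // example_tokens


# Illustrative sizes: 100-token instruction, 300 tokens per example pair,
# 400-token source, 400 tokens reserved for the output.
for model, limit in CONTEXT_LIMITS.items():
    print(f"{model}: {max_examples(limit, 100, 300, 400, 400)} examples")
# prints:
# Luminous (2k): 3 examples
# GPT-3.5 (4k): 10 examples
# GPT-4 (32k): 106 examples
# Claude (100k): 330 examples
```

With these assumed sizes, a 2k context leaves room for only about three examples, which is why a small-context model has to learn from sparse examples.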

For the time being, djAI supports GPT and AlephAlpha, but other models can easily be supported by writing a plugin class that fulfills a simple interface. Apart from sending HTTP requests to the model, the main task is translating the abstract training syntax into the model's native syntax.
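A plugin's translation step might look like the following sketch. The abstract training format (an instruction plus input/output example pairs) and the `ModelPlugin` interface are assumptions for illustration, not djAI's actual plugin API. The chat variant produces the role/content message list used by OpenAI's chat models; the completion variant produces a single text prompt of the kind a completion model such as Luminous accepts, with an `Input:`/`Output:` layout chosen arbitrarily here.

```python
from abc import ABC, abstractmethod
from typing import Any

# Hypothetical model-neutral training: {"instruction": str, "examples": [{"input", "output"}]}
Training = dict[str, Any]


class ModelPlugin(ABC):
    """Sketch of a plugin interface: translate the abstract training into the
    model's native request content. Sending the HTTP request is left out."""

    @abstractmethod
    def translate(self, training: Training, source: str) -> Any: ...


class ChatPlugin(ModelPlugin):
    """Chat-style models (e.g. GPT-3.5/GPT-4): a list of role/content messages."""

    def translate(self, training: Training, source: str) -> list[dict[str, str]]:
        messages = [{"role": "system", "content": training["instruction"]}]
        for ex in training["examples"]:
            # Each example becomes a user turn followed by the desired assistant answer.
            messages.append({"role": "user", "content": ex["input"]})
            messages.append({"role": "assistant", "content": ex["output"]})
        messages.append({"role": "user", "content": source})
        return messages


class CompletionPlugin(ModelPlugin):
    """Completion-style models (e.g. Luminous): one plain-text prompt."""

    def translate(self, training: Training, source: str) -> str:
        parts = [training["instruction"], ""]
        for ex in training["examples"]:
            parts += [f"Input: {ex['input']}", f"Output: {ex['output']}", ""]
        # The prompt ends with an open "Output:" for the model to complete.
        parts += [f"Input: {source}", "Output:"]
        return "\n".join(parts)


training = {
    "instruction": "Convert the text to a numbered list.",
    "examples": [{"input": "Open the lid. Add water.", "output": "1. Open the lid.\n2. Add water."}],
}
print(CompletionPlugin().translate(training, "Close the lid. Press start."))
print([m["role"] for m in ChatPlugin().translate(training, "Close the lid. Press start.")])
```

Because both plugins consume the same abstract training, the same training can be tried on different models simply by switching the plugin.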

Feature matrix and license levels

djAI is available in four different license levels:

Personal

You can download the djAI server and client software for free. There is no functional limitation compared with the paid licenses, apart from being limited to a single user key with full privileges and no keys with limited privileges. However, the following rules and restrictions apply:

- You may NOT use the personal edition for any kind of organization, and even individuals may not use it to generate revenue or as part of any commercial services or products.

- You may NOT share the same server with multiple users by sharing the same key, even though it is technically possible.

- You may use the personal edition without any further limitation for individual, non-commercial applications.

- You may, however, use the personal edition in a commercial context and/or for an organization to develop or test transforms.

- You may, for convenience, run the server on a different machine than your desktop PC, for example, an office server running Linux or Windows. However, the restriction still applies that only one user may use it.

Commercial

The commercial editions (team, professional and enterprise) can be used for commercial applications and by organizations. They differ only in the number of accounts and/or sites they permit.

- Team edition: 1 instance, 1 briefing author, 10 users

- Professional edition: 1 instance, 5 briefing authors, 500 users

- Enterprise edition: unlimited instances, briefing authors and users