Machine talk

A white paper about software development in the AI age

Introduction

Software Development, the skill as we have known it so far, is the ability to understand a problem, find a solution concept for it and break it down into instructions that enable a computer to solve the problem. Describing the problem to the computer did not help, and neither did giving it a few examples of how similar problems were solved in the past and asking it to find an adapted solution by itself.

Scientists have been researching AI for decades, and (quite invisibly to the public) many AI-based solutions for specific problem classes have been developed. Examples are AI-based support for evaluating medical scan data, finding items on a conveyor belt that don't meet quality standards, or simple chatbots that basically repeat what's in the FAQ when they find a certain keyword in what the user typed.

We're beyond that now. AI services and software products now available for Natural Language Processing (NLP), image creation or interpretation, speech-to-text, or more specialized tasks like music creation have reached a level where they can be applied to virtually all kinds of tasks within their domain. So, while chatbots from two years ago can be compared to software specialized in removing red eyes from photographs, today's AI performance allows bots that are better compared to universal image processing software such as GIMP or Adobe Photoshop.

The most prominent of these AI services is probably the NLP AI ChatGPT (where GPT stands for Generative Pre-trained Transformer). It can be used not only through the web interface; basically the same functionality is exposed through an Application Programming Interface (API), allowing developers to integrate AI reasoning into their own software products. I recently wrote a white paper, Chat for one, about some of the various things I did with ChatGPT in the past months. This paper is about some of my experiences with the programming interface.

So how do you write an AI program?

You've probably heard the term Prompt Engineering, and perhaps you've read in the media that Silicon Valley is craving Prompt Engineers and offers them salaries in the mid-six-digit US$ range. I don't know whether the latter is true, but I think a Prompt Engineer deserves about the same salary as a Software Developer or Architect, depending on the exact job description. Prompt Engineering is not magic or some sort of enhanced empathy with machines; it is a form of Software Development. What Prompt Engineers do is write instructions for computers to solve problems. That is just as true of the daily business of traditional developers.

The difference is rather in the way the machine is instructed.

Concepts of classical programming languages differ, and there are some exotic ones like Prolog that move away from the common pattern, but basically, a classical program consists of small elements (commands or instructions) that step by step do small operations on the data that bring it closer to a problem solution.

AIs are not instructed this way. An NLP AI brings along the ability to "understand" the text (or better: to behave as if it did). Therefore, we do not have to write lengthy software code to tell it how to (for example) find all the nouns in an input text in all possible languages and replace them with the correct terms. We just have to provide the correct terms and explain how to find the wrong ones. A good way to teach such things is a combination of instructions and examples, including examples of how not to do it. The interesting thing is: if we teach an AI something based on an English example, and the same thing makes sense in German as well, the AI is likely to be able to do it in German too, even though it was never trained with German text for this task. So, the AI can do all the basic linguistic processing and also powerful reasoning; we must "just" provide the data it doesn't have by default and tell it what we expect it to do with the user's input data.

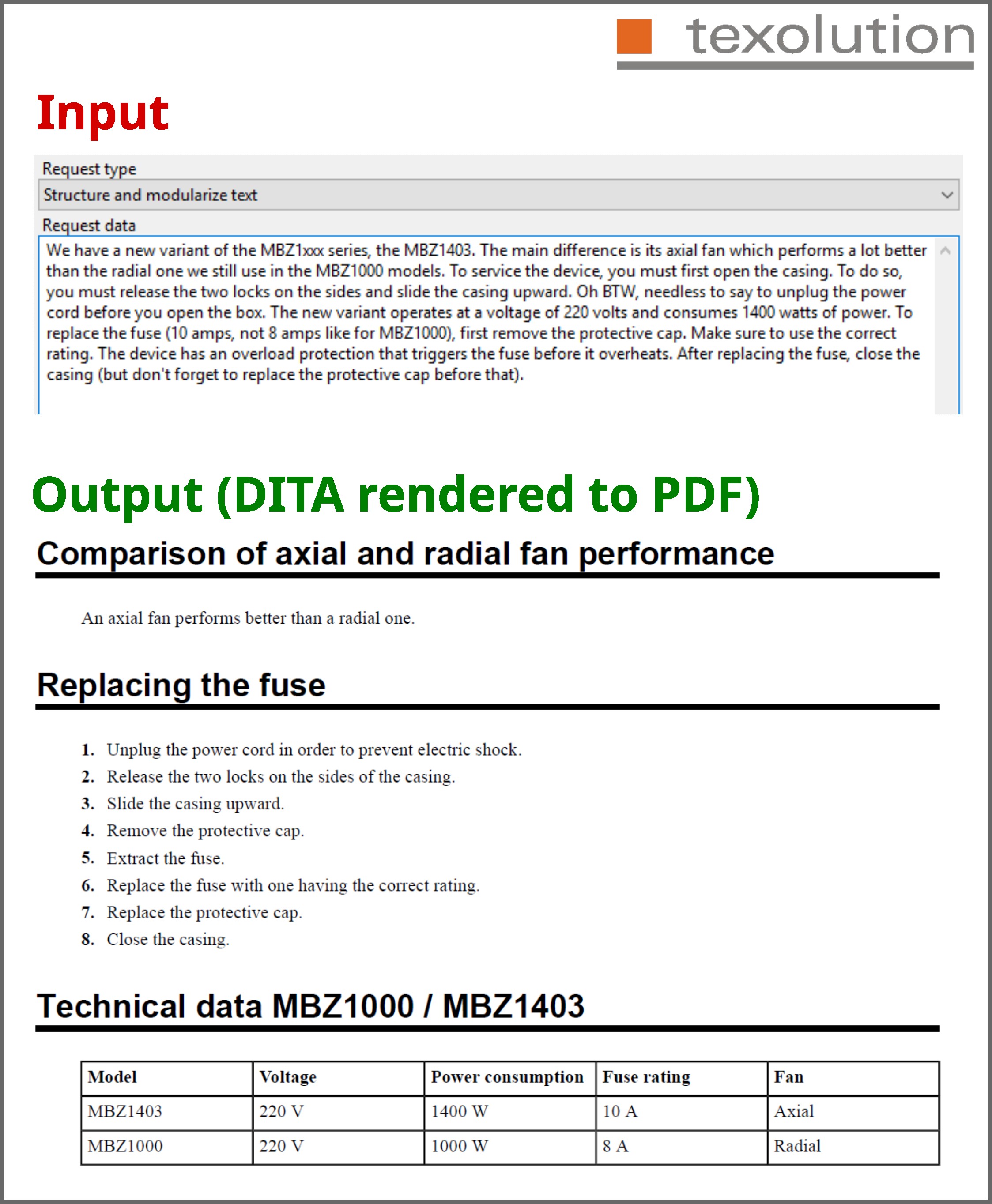

The AI context in this image was trained mainly with German data and had just a short training example in English. The DITA training consisted of only one example per topic type (admittedly, so far without any of the advanced markup - we'll add hazardstatements, examples and preconditions later).

In ChatGPT, training data can be uploaded as a JSON file, which can be understood as the "AI program". The development process itself is, again, closer to the traditional way - you change the training data, test, implement improvements and test again.
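As an illustration of what such an "AI program" might look like, here is a minimal Python sketch that assembles rules, one example and one anti-example into a list of role-tagged messages and serializes it to JSON. The role/content layout follows the chat message format of the API; the helper name build_training_messages, the INPUT/OUTPUT labels and the sample texts are assumptions made for illustration, not the training data behind the screenshot.

```python
import json


def build_training_messages(rules, examples, anti_examples):
    """Assemble rules, examples and anti-examples into a chat message list."""
    # The rules go into a single system message, one rule per line.
    messages = [{"role": "system", "content": "\n".join(rules)}]
    # Each good example is a user/assistant pair the model can imitate.
    for source, target in examples:
        messages.append({"role": "user", "content": "INPUT:\n" + source})
        messages.append({"role": "assistant", "content": "OUTPUT:\n" + target})
    # Anti-examples are described in a system message, so the model is
    # never shown a wrong answer in the assistant role.
    for source, wrong_target in anti_examples:
        messages.append({
            "role": "system",
            "content": ("Do NOT answer like this.\nINPUT:\n" + source
                        + "\nWRONG OUTPUT:\n" + wrong_target),
        })
    return messages


# Hypothetical sample data, for illustration only.
rules = [
    "Rewrite the instructions in INPUT as a numbered list.",
    "Put the steps into the order in which they must be performed.",
]
examples = [("Close the lid. Before that, fill the tank.",
             "1. Fill the tank.\n2. Close the lid.")]
anti_examples = [("Close the lid. Before that, fill the tank.",
                  "1. Close the lid.\n2. Fill the tank.")]

messages = build_training_messages(rules, examples, anti_examples)
training_json = json.dumps(messages, ensure_ascii=False, indent=2)
```

Keeping the training data in one file like this makes the change, test, improve cycle easy to put under version control, just like source code.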

One-shot versus dialog applications

Evidently, the example shown is of one-shot type. A user converts data of type A into type B, and then perhaps does so again with a new set of data, and again. But each operation starts with the same set of training data and it is not expected that the system learns from subsequent user input.

But why not? We could implement a one-shot problem as a dialog, so that the application can learn and improve its reasoning. But this would require some sort of feedback mechanism, either from the user or from another AI instance that validates the previous result and returns feedback if it needs to be improved. Without a feedback mechanism, dialog-type reasoning should rather be avoided, because the subsequent user data will change the AI's behaviour, but not necessarily for the better. The AI model could start producing wrong output and cultivate this habit, because it is never corrected. Tokens, the units that are charged for when using the API, are quite cheap (fractions of a cent for a typical transform), so it's not worth risking data quality to reduce the upload volume of training data. With consistent and specific feedback, however, the dialog approach can be a way to increase quality.

This approach, however, reveals a weakness of the GPT API: it does not have sophisticated session management with precise control over how contexts are stored. There is a way to pick up a dialog context (which is required for dialog applications like chatbots), but there is no reliable way to influence the lifetime of a context, or even to be informed that a context has expired. We haven't tested this thoroughly yet, but one mechanism to cope with this could be to train the AI to return a certain string (like "OUTPUT") at the beginning of each response. If "OUTPUT" is missing, this could indicate that the AI has forgotten the training and silently set up a new context.
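The marker idea can be sketched in a few lines of Python. This is an untested-in-production illustration under assumptions: send and upload_training are hypothetical callables that wrap whatever API calls the application uses, and the marker is expected at the very start of the response, optionally followed by a colon.

```python
SENTINEL = "OUTPUT"


def split_response(response_text, sentinel=SENTINEL):
    """Return (context_alive, payload) for one model response."""
    stripped = response_text.lstrip()
    if stripped.startswith(sentinel):
        # Marker found: drop it together with a following colon or blanks.
        return True, stripped[len(sentinel):].lstrip(" :\n")
    # Marker missing: the training has probably been forgotten.
    return False, response_text


def transform(user_text, send, upload_training):
    """Send user_text; re-upload the training data once if the marker is missing.

    send(text) -> str and upload_training() -> None are hypothetical
    wrappers around the real API calls.
    """
    alive, payload = split_response(send(user_text))
    if not alive:
        upload_training()
        alive, payload = split_response(send(user_text))
        if not alive:
            raise RuntimeError("marker still missing after re-training")
    return payload
```

The retry is limited to one attempt on purpose, so that a permanently misbehaving context cannot trigger an endless and costly upload loop.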

If OpenAI granted me a wish, I'd ask for an API method that creates a context with a lifetime of my choice: two hours, one month, or infinity. Another method would kill a context. The method that creates a chat response could be called either without a context (then it returns one response and forgets the temporary context afterwards) or with a context, in which case it can access everything that happened before. Nice to have: a method to clone a context, so that one context can be trained with complex data and then cloned for each request (with the clone being killed after the request).

I am aware that this would incur costs in the form of resources used by saved contexts, but OpenAI could charge for it and let the account owner decide what to keep and for how long.

This would improve both dialog and one-shot applications:

- For one-shot applications, it would enable uploading very large training data once and reusing it forever without uploading it again. The clone function would keep the training data constant over time.

- Dialog applications could be saved, so that a website user who returns the next day could chat with the bot and continue where the dialog ended. Also, it would allow keeping specialized contexts with large training data in the background for a chatbot to consult when rather specific questions occur.

For the time being, both are somewhat less than they could be (even though I understand the conceptual reasons for the limitations).
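To make the wish list concrete, the following sketch models the desired operations as a small in-memory Python class. Nothing here exists in the OpenAI API: ContextStore and its methods create, get, clone and kill are purely hypothetical names, and the clock is injectable only so that expiry can be demonstrated without waiting.

```python
import copy
import itertools
import time


class ContextExpired(KeyError):
    """Raised when a context is unknown, killed or past its lifetime."""


class ContextStore:
    """Hypothetical in-memory model of the wished-for context methods."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._contexts = {}  # context id -> (expires_at or None, messages)
        self._ids = itertools.count(1)

    def create(self, messages=(), lifetime_seconds=None):
        """Create a context; lifetime_seconds=None stands for 'infinity'."""
        context_id = next(self._ids)
        expires_at = (None if lifetime_seconds is None
                      else self._clock() + lifetime_seconds)
        self._contexts[context_id] = (expires_at, list(messages))
        return context_id

    def get(self, context_id):
        """Return the stored messages, or report expiry explicitly."""
        entry = self._contexts.get(context_id)
        if entry is None:
            raise ContextExpired(context_id)
        expires_at, messages = entry
        if expires_at is not None and self._clock() >= expires_at:
            del self._contexts[context_id]
            raise ContextExpired(context_id)
        return messages

    def clone(self, context_id, lifetime_seconds=None):
        """Copy a trained context so that the original stays constant."""
        return self.create(copy.deepcopy(self.get(context_id)), lifetime_seconds)

    def kill(self, context_id):
        """Delete a context; killing an unknown context is not an error."""
        self._contexts.pop(context_id, None)
```

A one-shot application would create the trained context once with an unlimited lifetime, clone it for each request and kill the clone afterwards. The important design point is that an expired context raises ContextExpired instead of being silently replaced by an empty one.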

Modular functionality

Just like in classical, algorithmic development, it is not good design to cover all steps of data manipulation in one huge training data set. The tests showed that tasks should rather be broken down into smaller, clearly defined steps with one optimization goal each (for example, bringing confused instructions into the correct order). These modular functions can then be glued together by a regular program that passes the data from module to module and perhaps does some algorithm-based processing in between. This also helps make the AI operations more reusable.
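The gluing program can be very small. The Python sketch below is one possible shape, under assumptions: call_model is a hypothetical callable that sends one rule set plus the text to the AI and returns its answer, and normalize_whitespace stands in for any conventional processing between AI steps.

```python
import re


def run_pipeline(text, steps):
    """Pass the text through each step in order and return the result."""
    for step in steps:
        text = step(text)
    return text


def normalize_whitespace(text):
    """Conventional, algorithm-based step between two AI modules."""
    return re.sub(r"[ \t]+", " ", text).strip()


def make_ai_step(rules, call_model):
    """Wrap one narrowly scoped AI task as a pipeline step.

    call_model(rules, text) -> str is a hypothetical wrapper around the
    API call; each step gets its own small rule set with a single goal.
    """
    def step(text):
        return call_model(rules, text)
    return step
```

A pipeline for the task from the screenshot might then read run_pipeline(raw_text, [normalize_whitespace, make_ai_step(order_rules, call_model), make_ai_step(warning_rules, call_model)]), where order_rules and warning_rules are separate, small training data sets. Each AI step can be tested and reused on its own.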

While writing huge methods in classical Software Development is bad style and hard to maintain, it may function exactly as well as a clean and modular application. This is unlikely for monolithic AI tasks with many things to do at the same time (like structuring text, adding warnings and translating it to French). Each of the things the AI should do is, in simplified mathematical terms, a local maximum in a multidimensional function. If the AI has a clear goal in a step, then there is only one maximum. This makes the optimization problem easier for the AI to solve.

Usage cost economy

OpenAI charges a fraction of a cent per 1,000 tokens transmitted (request plus response). A token is one to a few characters in length. The transform shown in the screenshot costs roughly half a cent, including the upload of the training data. Unless we want to apply the same rule set to a million input data sets, we shouldn't worry too much about the transaction cost. Still, reducing the size of the training data can pay off. This does not necessarily mean thinning it out in terms of density, but rather writing the instructions in sentences that are as short as possible. Short sentences are often clear sentences, so this way of reducing size can actually increase quality.

Plus, since the models are limited in token length (for example, GPT-3.5 Turbo to 4k tokens per dialog step, counting user input and GPT response), this can free space for more examples etc., increasing the transform quality further.
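A rough estimate of token count, cost and remaining space can be computed before sending anything. The following Python sketch rests on loudly stated assumptions: four characters per token is only a rule of thumb for English text, the price per 1,000 tokens is a placeholder rather than a quoted tariff, and 4,096 is used as the concrete value of the "4k" limit. For exact numbers, a real tokenizer and the current price list would be needed.

```python
import math

CHARS_PER_TOKEN = 4          # assumption: rough average for English text
PRICE_PER_1K_TOKENS = 0.002  # assumption: placeholder price in US$
CONTEXT_LIMIT = 4096         # assumption: concrete value for "4k tokens"


def estimate_tokens(text):
    """Estimate the token count of a text from its length in characters."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_call(training_data, user_input, expected_output_chars):
    """Estimate tokens, cost and fit for one request plus response."""
    tokens = (estimate_tokens(training_data)
              + estimate_tokens(user_input)
              + math.ceil(expected_output_chars / CHARS_PER_TOKEN))
    return {
        "tokens": tokens,
        "cost_usd": tokens / 1000 * PRICE_PER_1K_TOKENS,
        "fits": tokens <= CONTEXT_LIMIT,
        "tokens_left": CONTEXT_LIMIT - tokens,
    }
```

With these assumed numbers, 6,000 characters of training data plus 2,000 characters each of input and output come to 2,500 tokens, or about half a cent per transform, and leave roughly 1,600 tokens for additional examples.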

Only in the case of very many transforms with the same rules could we use the dialog approach described before, uploading the training data only when the context has collapsed. Otherwise, we can safely ignore the cost.

Protection of intellectual property

A conventional program will never reveal by itself how it works. To learn how it works, one would have to reverse engineer it. Nor does a conventional program have any form of (even simulated) understanding of its own functionality - it just executes it step by step.

An AI-based application is trained with rules, sample data and expected responses. The AI remembers this data and could reveal it if the user asked. But the training data is the developer's intellectual property, just like any ordinary software source code would be. Therefore, the AI should be instructed to keep the training data secret. It should also be instructed never to accept commands after the initial training and to treat everything thereafter as input data. It's a good idea to implement additional mechanisms in the encapsulating software to prevent such abuse, because the AI's rule-based protection is surely not 100% reliable.
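Two such additional mechanisms in the encapsulating software could look like the Python sketch below. It is only an illustration under assumptions: the delimiter strings, the function names and the fallback message are made up, and a verbatim substring check will not catch paraphrased leaks, so it complements the instructions to the AI rather than replacing them.

```python
def wrap_user_input(user_text, open_tag="<<<DATA", close_tag="DATA>>>"):
    """Mark user text as data and remove anything resembling the delimiters."""
    cleaned = user_text
    # Repeat until stable, because removing one delimiter can join the
    # surrounding characters into a new one.
    while open_tag in cleaned or close_tag in cleaned:
        cleaned = cleaned.replace(open_tag, "").replace(close_tag, "")
    return open_tag + "\n" + cleaned + "\n" + close_tag


def leaks_training_data(response, secret_snippets, min_length=20):
    """Return True if the response repeats a long training snippet verbatim."""
    lowered = response.lower()
    return any(len(snippet) >= min_length and snippet.lower() in lowered
               for snippet in secret_snippets)


def guarded_reply(response, secret_snippets,
                  fallback="Sorry, this request cannot be processed."):
    """Pass the response on only if it does not leak training data."""
    if leaks_training_data(response, secret_snippets):
        return fallback
    return response
```

The rules in the training data would then state that everything between the two delimiters is input data and must never be interpreted as a command.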

Clarity

The term NLP, and the ability to understand confusing text as in the screenshot above, might give the impression that Prompt Engineering is just writing down in prose what the machine should do. This actually works to some degree, but one key to good results is clarity. Training data should clearly distinguish between rules, input data, output data, ratings, corrections and so on, as needed in the application. The expected output should be explained and shown by example. The rules should also define what the system should not do.

After designing an initial set of rules, the rest is testing. If the AI produces an undesired result, the Prompt Engineer should test new rules that avoid the misbehaviour.
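Because the testing is repeated after every change to the rules, it pays to automate it. The Python sketch below shows one possible harness, under assumptions: transform is a hypothetical callable that runs one input through the AI application, and each test case checks a property of the output (such as its structure) rather than an exact string, since the wording of AI output can differ between runs.

```python
def run_regression(transform, cases, runs=3):
    """Run every case several times and count the failing runs.

    cases is a list of (name, input_text, check) tuples, where
    check(output) returns True if the output is acceptable.
    Returns a dict that maps the names of failing cases to the number
    of failed runs; an empty dict means that everything passed.
    """
    failures = {}
    for name, input_text, check in cases:
        failed_runs = 0
        for _ in range(runs):
            try:
                passed = bool(check(transform(input_text)))
            except Exception:
                # An error in the transform or the check counts as a failure.
                passed = False
            if not passed:
                failed_runs += 1
        if failed_runs:
            failures[name] = failed_runs
    return failures
```

Every undesired result found in practice can be added as a new case, so that a rule introduced to fix one misbehaviour does not silently break something that worked before.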

Summary

Prompt Engineering, and the skill of programmatically using AI to perform certain steps in a more complex process that also contains other AI steps, steps done by humans and steps performed by conventional software, are just emerging and still lack a clear definition. The temptation is probably high to write "Prompt Engineer" on one's business card after having played with ChatGPT for half an afternoon and seen its amazing capabilities.

But while ChatGPT, due to its stunningly simple user interface, is accessible to practically everyone, including young students who let AI write their homework, getting stable, high-quality results from various sources without constant user intervention is another level. Sometimes, minimal changes to the training data can make a rather big difference. Contradictions between rules and examples are a common mistake when editing and testing the training data, and they tend to put the AI on thin ice. In some cases, the output can fail completely because the AI is not prepared for something in the data and does not see how it could apply the rules. It then takes some testing to find out what that something is and how to avoid it.

AI behaviour is less predictable than the result of an algorithm; it's not even reproducible. If you ask the untrained chat application on the OpenAI website for instructions on how to, for example, reset a specific smartphone to factory settings, you will get different results each time, but (hopefully) all of them correct. It finds one of the local maxima in the solution space - not necessarily the best possible solution. Training data with examples and clear rules to follow increases consistency and quality and defines the format and structure of the output. With a certain amount of training, tasks that do not have too many degrees of freedom can be brought to yield predictable results. A task like "write song lyrics about a horse" will - by design, because here, creativity is the job description - yield different results every time, and that's what the user wants in this case.

When I started working with AI applications, I had the - let's call it prejudice - question in mind: "Is Prompt Engineer really worth a job title of its own?" Now I believe it is, and that Prompt Engineering is probably best described as a category of Software Engineering. Prompt Engineering pursues very similar goals to its mother science (namely, explaining to a computer how to solve a problem), and both use text input to do that. The term Natural Language Processing seems to imply that at least the way the instructions are written is totally different. But not even that is the case. In AI training, it is quite helpful to first use natural sentences to define a sort of syntax, and then to write the rules for the AI's behaviour in that command-like language. There are some differences though. The syntax is mostly user-defined and liberal. Training also uses examples and anti-examples, a concept rather unknown in classical computing. But even with that in mind: experience in Software Development helps a lot when designing training data for an AI application.

I hope this article gave you the impression that Prompt Engineering is more complex than typing something like "Hey, I got some text here, can you write it more clearly?" (even though this alone can yield surprisingly good results). Still, it's neither alchemy nor rocket science. It follows certain rules and best practices and can be learned. And many people are doing just that at this very minute. I'm almost certain that within the next twelve months I'll be surprised quite often by a new application no one expected.