
Machine talk

A white paper about software development in the AI age

Introduction

Software development, the skill as we have known it so far, is the ability to understand a problem, find a solution concept for it and break that concept down into instructions that lead a computer to solve the problem. Describing the problem to the computer did not help, nor did giving the computer a few examples of how similar problems had been solved and asking it to find an adapted solution.

Scientists have been researching AI for decades, and (largely invisible to the public) many AI-based solutions for specific problem classes have been developed. Examples include AI-based support in the evaluation of medical scan data, detecting items on a conveyor belt that don't meet the quality standards, and simple chatbots that basically repeat what's in the FAQ when they find a certain keyword in what the user typed.

We're beyond that now. The AI services and software products available today for Natural Language Processing (NLP), image creation or interpretation, speech-to-text, or more specialized tasks such as the creation of music have reached a level where they can be applied to virtually all kinds of tasks within their functionality. So, while chatbots from two years ago can be compared to software specialized in removing red eyes from photographs, today's AI performance allows bots that are better compared with universal image processing software such as GIMP or Adobe Photoshop.

Such AI services, the most prominent of which is probably the NLP AI ChatGPT (where GPT stands for Generative Pre-trained Transformer), can be used not only through the web interface; basically the same functionality is exposed through an Application Programming Interface (API), allowing developers to integrate AI reasoning into their own software products. I recently wrote a white paper, Chat for one, about some of the various things I did with ChatGPT in the past months. This paper is about some of my experiences using the programming interface.

So how does the availability of AI change the way software is written?

You've probably heard the term Prompt Engineering, and perhaps you've read articles claiming that Silicon Valley is craving Prompt Engineers and offers them salaries in the mid-six-digit US$ range. I don't know whether the latter is true, but I think a Prompt Engineer deserves about the same salary as a Software Developer or Architect, depending on the exact job description. Prompt Engineering is not magic or some sort of enhanced empathy with machines; it is a form of Software Development. What Prompt Engineers do is write instructions for computers to solve problems. That is roughly what the daily business of traditional developers consists of, too.

The difference is rather in the way the machine is instructed.

Concepts of classical programming languages differ, and there are some exotic ones like Prolog that move away from the common pattern, but basically, a classical program consists of small elements (commands or instructions) that, step by step, perform small operations on the data and bring it closer to a solution of the problem.

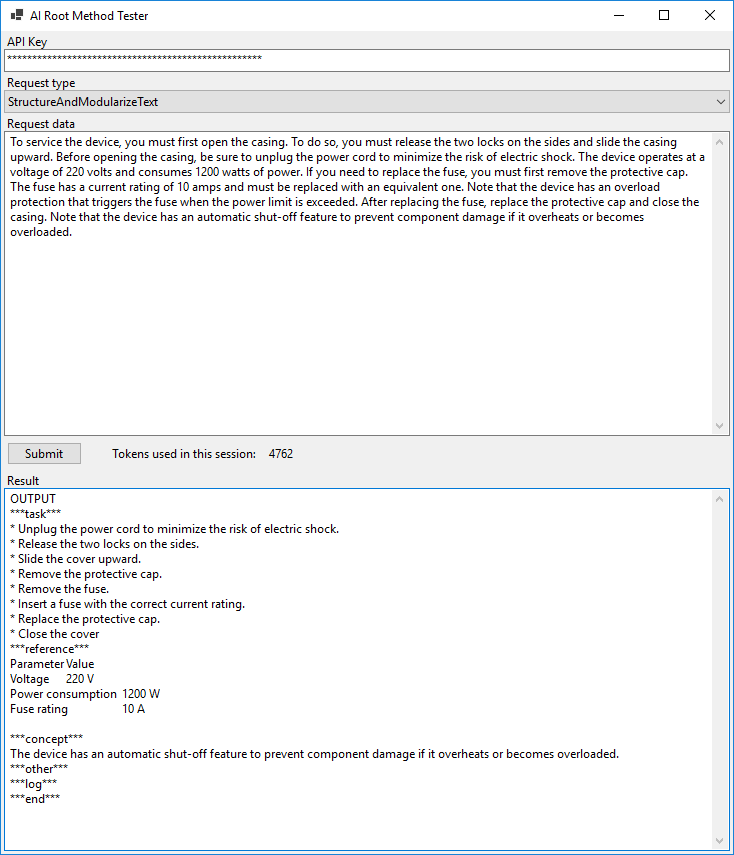

AIs are not instructed this way. An NLP AI brings along the ability to "understand" text (or better: to behave as if it did). Therefore, we do not have to write lengthy software code to tell it how to (for example) find all the nouns in an input text in all possible languages and replace them with the correct terms. We just have to provide the correct terms and explain how to find the wrong ones. A good way to teach such things is a combination of instructions and examples, including examples of how not to do it. The interesting thing is: if we teach an AI something based on an English example, and the same thing makes sense in German as well, the AI is likely to be able to do it in German too, even though it has never been trained with German text. The AI context in this screenshot has mainly been trained with German data, and received just a short example in English:

So the AI can do all the basic linguistic processing as well as powerful reasoning; we just have to provide the data it doesn't have by default and tell it what we expect it to do with the user's input data.
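To make the combination of instructions, examples and counter-examples more concrete, here is a minimal sketch in Python. It only assembles the text that would be sent to an NLP AI; it does not call any service. The function name `build_prompt`, the wording of the prompt and the product names are made up for illustration, and the `role`/`content` message layout is an assumption modelled on common chat-style APIs.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_prompt(instructions: str,
                 good_examples: List[Dict[str, str]],
                 bad_examples: List[Dict[str, str]],
                 user_input: str) -> List[Message]:
    """Assemble a chat-style message list from instructions and examples."""
    lines = [instructions, "", "Do it like this:"]
    for ex in good_examples:
        lines.append(f"Input: {ex['input']}")
        lines.append(f"Output: {ex['output']}")
    if bad_examples:
        # Counter-examples: show the model what a wrong answer looks like.
        lines.append("")
        lines.append("Do NOT do it like this:")
        for ex in bad_examples:
            lines.append(f"Input: {ex['input']}")
            lines.append(f"Wrong output: {ex['output']}")
    return [
        {"role": "system", "content": "\n".join(lines)},
        {"role": "user", "content": user_input},
    ]


# Hypothetical task: the examples are English, the user input is German.
messages = build_prompt(
    instructions="Replace outdated product names with the current ones.",
    good_examples=[{"input": "Open the Foo panel.",
                    "output": "Open the Bar panel."}],
    bad_examples=[{"input": "Open the Foo panel.",
                   "output": "Open the panel."}],
    user_input="Öffnen Sie das Foo-Panel.",
)
```

Note that there is no German-specific code anywhere in this sketch; whether the German input is handled correctly depends entirely on the AI behind it.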

In ChatGPT, training data can be uploaded as a JSON file, which can be understood as the "AI program". The development process itself is, again, closer to the traditional way: you change the training data, test, implement improvements and test again.
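As a rough illustration of treating training data as the "AI program", the following Python sketch serializes a list of example pairs to a JSON document that could be kept under version control and changed between test runs. The layout (an `examples` list with `input` and `output` keys) and the function name `training_data_to_json` are assumptions made for this sketch; the format the service actually expects is not described here and may well differ.

```python
import json
from typing import Dict, List


def training_data_to_json(examples: List[Dict[str, str]]) -> str:
    """Serialize example pairs to a JSON document (hypothetical layout)."""
    for ex in examples:
        # Reject incomplete examples early instead of uploading them.
        if not ex.get("input") or not ex.get("output"):
            raise ValueError(f"incomplete example: {ex!r}")
    return json.dumps({"examples": examples}, ensure_ascii=False, indent=2)


examples = [
    {"input": "Open the Foo panel.", "output": "Open the Bar panel."},
    {"input": "Close the Foo panel.", "output": "Close the Bar panel."},
]
document = training_data_to_json(examples)
```

Keeping the examples in a plain data structure like this makes the change, test, improve cycle look much like editing source code.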

Modular functionality

Just like in classical, algorithmic development, it is not good design to cover all steps of data manipulation in one huge training data set. The tests showed that tasks should rather be broken down into smaller, clearly defined steps with one optimization goal each (for example, bringing confused instructions into the correct order). These modular functions can then be glued together by a regular program that passes the data from module to module and perhaps does some algorithm-based processing in between. This also helps to make the AI operations more reusable.
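The gluing described above might look like the following Python sketch. Each AI module has exactly one goal, and a regular program passes the data along, with an algorithmic step at the end. All names here are hypothetical; in particular, `Model` is just any function that takes a prompt and returns text, and `fake_model` is a stand-in that returns its input unchanged so that the sketch runs without an AI service.

```python
from typing import Callable

# A "model" is any callable that takes a prompt and returns text.
Model = Callable[[str], str]


def reorder_steps(model: Model, text: str) -> str:
    """AI module with one goal: put confused instructions in order."""
    return model("Put these instructions into the correct order:\n" + text)


def simplify_wording(model: Model, text: str) -> str:
    """AI module with one goal: rewrite the text in plain language."""
    return model("Rewrite in plain language:\n" + text)


def number_lines(text: str) -> str:
    """Algorithmic step: drop empty lines and number the rest."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    return "\n".join(f"{i}. {ln}" for i, ln in enumerate(lines, start=1))


def pipeline(model: Model, text: str) -> str:
    """Regular program that passes the data from module to module."""
    ordered = reorder_steps(model, text)
    simplified = simplify_wording(model, ordered)
    return number_lines(simplified)


def fake_model(prompt: str) -> str:
    """Stand-in for a real AI call: returns the text after the first line."""
    return prompt.split("\n", 1)[1]


result = pipeline(fake_model, "Boil water\n\nAdd tea")
```

Because every module takes the model as a parameter, each one can be tested on its own and reused in other pipelines.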