AI briefing

The classical way of getting Large Language Models (LLMs) to perform certain tasks reliably is training. To train an LLM, a large number of inputs and the expected outputs are typically compiled into a data set. In a training run, this data is integrated into the AI's "knowledge" by computing new weights based on it. This step requires the very significant computational power of high-end GPUs with sufficient RAM.

Just to give an impression of the dimensions: realistic hardware for training could be a server with 192 GB of RAM and 5 to 10 NVIDIA A100 boards with 80 GB of video RAM each, each costing around $15,000. Such servers can be rented as dedicated machines for significant monthly fees, but since training is performed only occasionally and takes a few hours to a few days, it makes more sense to rent cloud hardware for training when it's needed and to use smaller, cheaper hardware for inference.
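
To make the dimensions concrete, the figures quoted above can be turned into a quick calculation. This is only back-of-the-envelope arithmetic on the numbers from the text (5 to 10 boards, 80 GB and around $15,000 each); the function name is made up for this sketch, and real prices vary.

```python
# Rough arithmetic on the figures quoted in the text; actual prices vary.
GPU_PRICE_USD = 15_000   # approximate price per A100 board (from the text)
GPU_VRAM_GB = 80         # video RAM per board (from the text)


def gpu_totals(board_count: int) -> tuple[int, int]:
    """Return (total GPU purchase price in USD, total video RAM in GB)."""
    return board_count * GPU_PRICE_USD, board_count * GPU_VRAM_GB


for boards in (5, 10):
    price, vram = gpu_totals(boards)
    print(f"{boards} boards: about ${price:,} for the GPUs alone, {vram} GB video RAM")
```

So the GPUs alone come to roughly $75,000 to $150,000 if bought, which is why renting for the short duration of a training run is attractive.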

Renting such a server for a training run sounds more expensive than it actually is: given the short time it's needed, the cost is typically in the hundreds of US dollars. The more expensive part is gathering and preparing the training data.

But is this really the way users have experienced LLMs since ChatGPT was released in November 2022? Who has actually trained an AI to achieve their goals?

Towards AGI

AI solutions that were in common use before ChatGPT appeared (largely unnoticed by the general public) were trained for a specific class of problems, such as optimizing the pictures taken by a smartphone camera, or machine translation. A newer example is GitHub Copilot, a version of GPT trained on large volumes of software source code in a variety of languages, which plugs into IDEs and assists software developers with code suggestions while they type. These suggestions are often quite useful (not necessarily brilliant, but the AI anticipates a lot of copy/paste and other routine work and can save a lot of typing). This is an example where it still makes sense today to train an AI with specific data. Depending on the type of operation expected from the AI, the underlying model doesn't necessarily have to be one of the cutting-edge models with significant hardware requirements for inference. If the linguistic requirements are low, for example when mapping one XML structure to another while preserving the data, a relatively "dumb" model (one trained on a small number of tokens) can be used and enabled to do its job by a large volume of examples.

On the other hand, ChatGPT and other large models with broad general knowledge (the open-source model BLOOM, for example) can be used for a variety of tasks without any specific training. The user just provides instructions, and the data to apply them to, in natural language. The user can also provide examples. The AI model can "understand" all of these and create a useful response (hopefully without any hallucinated pseudo-information).

A hypothetical model that can understand and answer in natural language, with broad general knowledge and a capability for (simulated) reasoning, all at least on the level of a human being with average intelligence and education, is called an AGI (Artificial General Intelligence). A user could have a discussion with an AGI just as with a human chat partner on the other end.

AI models available outside of laboratories are not yet there, but it feels like they are getting closer rapidly. And I'm not quite sure what happens inside the labs.

But how do users interact with these systems to perform a specific task? Let's look at two examples.

Using chat-based LLMs

Example 1: Text generation

Let's say you want to use ChatGPT to help you write a text promoting a new product on social media. You know the product, want to point out some unique features, and have an idea of the target group and the language to address them in. So you probably start out by giving the chat an outline of what this is about. Next, you will perhaps give it a few examples of how to write the text, maybe from previous, similar campaigns. You will give it further instructions about the expected length and other details, and more facts to be used as the basis for the text.

So if we take this apart, we have three categories:

- Instructions

- Examples

- Facts

Finally, you ask ChatGPT to generate the text, iteratively give it some more instructions to improve the result and when it's good enough use it for your intended purpose.
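
The three categories can be pictured as a small data structure. The following is a minimal sketch, not the format of any particular tool: the class name `Briefing`, its fields, the rendering as plain text, and the sample strings are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Briefing:
    """Hypothetical container for the three categories named above."""
    instructions: list[str] = field(default_factory=list)
    examples: list[str] = field(default_factory=list)
    facts: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render the briefing as one plain-text prompt, skipping empty parts."""
        parts = []
        for title, items in (
            ("Instructions", self.instructions),
            ("Examples", self.examples),
            ("Facts", self.facts),
        ):
            if items:
                parts.append(title + ":\n" + "\n".join(f"- {item}" for item in items))
        return "\n\n".join(parts)


# Placeholder content; a real briefing would carry the actual campaign material.
briefing = Briefing(
    instructions=["Write a short social media post for the target group."],
    examples=["<text of a previous, similar campaign>"],
    facts=["<unique feature of the product>"],
)
print(briefing.to_prompt())
```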

Example 2: Structuring unstructured text

We ran a number of tests using LLMs for standardized text operations. One example is to provide a confusing text like this one:

We have a new variant of the MBZ1xxx series, the MBZ1403. The main difference is its axial fan which performs a lot better than the radial one we still use in the MBZ1000 models. To servcie the device, you must first open the casing. To do so, you must reliese the two locks on the sides and slide the casing upward. Oh BTW, need less to say to unplug the power cord before you open the box. The new variant operates at a voltage of 220 volts and consumes 1400 watts of power. To replace the fuse (10 amps, not 8 amps like for MBZ1000), first remove the protective cap. Make sure to use the correct rating. The device has an overload protection that triggers the fuse before it overheats. After replacing the fuse, close the casing (but don't forget to replace the protective cap before that).

And get this result (to be further refined in subsequent processing steps):

OUTPUT
***task***
* Release the two locks on the sides.
* Slide the cover upward.
* Unplug the power cord to reduce the risk of electric shock.
WARNING Danger of death by electric shock.
* Remove the protective cap.
* Remove the fuse.
* Insert a 10A fuse.
* Replace the protective cap.
* Close the cover.
***reference***
Device version  Voltage  Power consumption  Fuse rating  Fan type
MBZ1000         220V     1000W              8A           Radial
MBZ1403         220V     1400W              10A          Axial
***concept***
The axial fan of the MBZ1403 performs much better than the radial fan used in the MBZ1000 model.
***other***
***metadata***
CAT TechDoc
AUDIENCE Tech
TITLE MBZ1403 Device Servicing Instructions
***log***
***end***

Yes, this is actually the result received from the ChatGPT 3.5 API with a single call.
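
Because the result uses named section markers, subsequent processing steps can split it mechanically. The sketch below assumes that every section starts with a marker of the form `***name***`, as in the result shown above; the function name and the shortened sample string are made up for illustration.

```python
import re

# Assumption: sections are introduced by markers such as ***task***,
# as in the sample result; the real syntax may differ in details.
SECTION_MARKER = re.compile(r"\*\*\*(\w+)\*\*\*")


def split_sections(output: str) -> dict[str, str]:
    """Map each section name to the stripped text that follows its marker."""
    pieces = SECTION_MARKER.split(output)
    # pieces = [text before first marker, name1, body1, name2, body2, ...]
    names, bodies = pieces[1::2], pieces[2::2]
    return {name: body.strip() for name, body in zip(names, bodies)}


# Shortened version of the result above, used only to exercise the parser.
sample = (
    "OUTPUT ***task*** * Remove the fuse. * Insert a 10A fuse. "
    "***concept*** The axial fan performs much better. ***log*** ***end***"
)
sections = split_sections(sample)
print(sections["task"])
```

Empty sections such as `log` simply map to an empty string, so later steps can check for content without special cases.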

How does this work?

The secret is that we do not pass only the actual text "We have a new variant…" to the LLM; we prefix it with what we call a briefing. LLM APIs allow passing not just a single message but an entire dialog between human and AI, which the AI then continues with its response. This is how chat works: the recent history of the chat is passed to the machine again, together with the new user message.
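
The dialog-passing mechanism can be sketched in a few lines. This assumes the role/content message dictionaries that are common in chat-style LLM APIs; the helper names are hypothetical, and no real API is called.

```python
# Assumption: messages are role/content dictionaries, as is common in
# chat-style LLM APIs. The helpers are illustrative, not a vendor client.
Message = dict[str, str]


def next_request(history: list[Message], user_text: str) -> list[Message]:
    """Return the full dialog to send: prior turns plus the new user message."""
    return history + [{"role": "user", "content": user_text}]


def record_reply(request: list[Message], reply_text: str) -> list[Message]:
    """Return the history to keep for the following turn."""
    return request + [{"role": "assistant", "content": reply_text}]


history: list[Message] = []
request = next_request(history, "Draft a product announcement.")
history = record_reply(request, "<model reply>")      # placeholder reply
request = next_request(history, "Make it shorter.")
print([message["role"] for message in request])
```

The second request carries all three messages, which is what lets the model "remember" the conversation even though each call is independent.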

We use this mechanism to prefix the user input with a preconfigured dialog containing very condensed training data, consisting of instructions, examples and facts. We also define an input and output syntax in it. This prefix is stored as a file and automatically sent to the model when a user passes data and chooses the specific operation.
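
As a minimal sketch of this prefixing step: the code below assumes one JSON file per operation containing a list of role/content messages. The file layout, the function name, the `INPUT` keyword and the demo briefing are assumptions for illustration, not the actual storage format.

```python
import json
import tempfile
from pathlib import Path


def build_messages(briefing_dir: Path, operation: str, user_input: str) -> list[dict]:
    """Load the stored dialog for an operation and append the user's input."""
    briefing_file = briefing_dir / f"{operation}.json"   # hypothetical file layout
    prefix = json.loads(briefing_file.read_text(encoding="utf-8"))
    return prefix + [{"role": "user", "content": user_input}]


# Demo with a tiny made-up briefing written to a temporary directory.
demo_prefix = [
    {"role": "system", "content": "Sort the input into task, reference and concept sections."},
    {"role": "user", "content": "INPUT <example input>"},
    {"role": "assistant", "content": "OUTPUT <example output>"},
]
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    (folder / "structure_text.json").write_text(json.dumps(demo_prefix), encoding="utf-8")
    messages = build_messages(folder, "structure_text", "INPUT <text supplied by the user>")

print(len(messages), messages[-1]["role"])
```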

It's a small fraction of the data normally used for training, but if the operation is not too complex in its details, it works quite well and fits conveniently into the 4k token limit of many models. It works because today's models have much better text understanding and reasoning capabilities, so a few examples are sufficient for them to pick up the relevant patterns.
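
Whether a briefing fits can be estimated before sending it. The sketch below uses a crude rule of thumb of about four characters per token for English text, which is an assumption and not an exact count; the model's own tokenizer gives precise numbers. It also assumes that the limit covers the briefing, the input and the reply together, so some room is reserved for the reply. The names and the reserve of 1,000 tokens are illustrative.

```python
# Assumption: roughly four characters per token for English text. This is a
# crude rule of thumb; use the model's own tokenizer for exact counts.
CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 4096   # the "4k" limit mentioned in the text


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return -(-len(text) // CHARS_PER_TOKEN)   # ceiling division


def fits(briefing: str, user_input: str, reply_reserve: int = 1000) -> bool:
    """Check whether briefing plus input leave reply_reserve tokens for the reply."""
    used = estimate_tokens(briefing) + estimate_tokens(user_input)
    return used + reply_reserve <= CONTEXT_LIMIT


print(fits("x" * 8000, "y" * 2000))
print(fits("x" * 12000, "y" * 2000))
```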

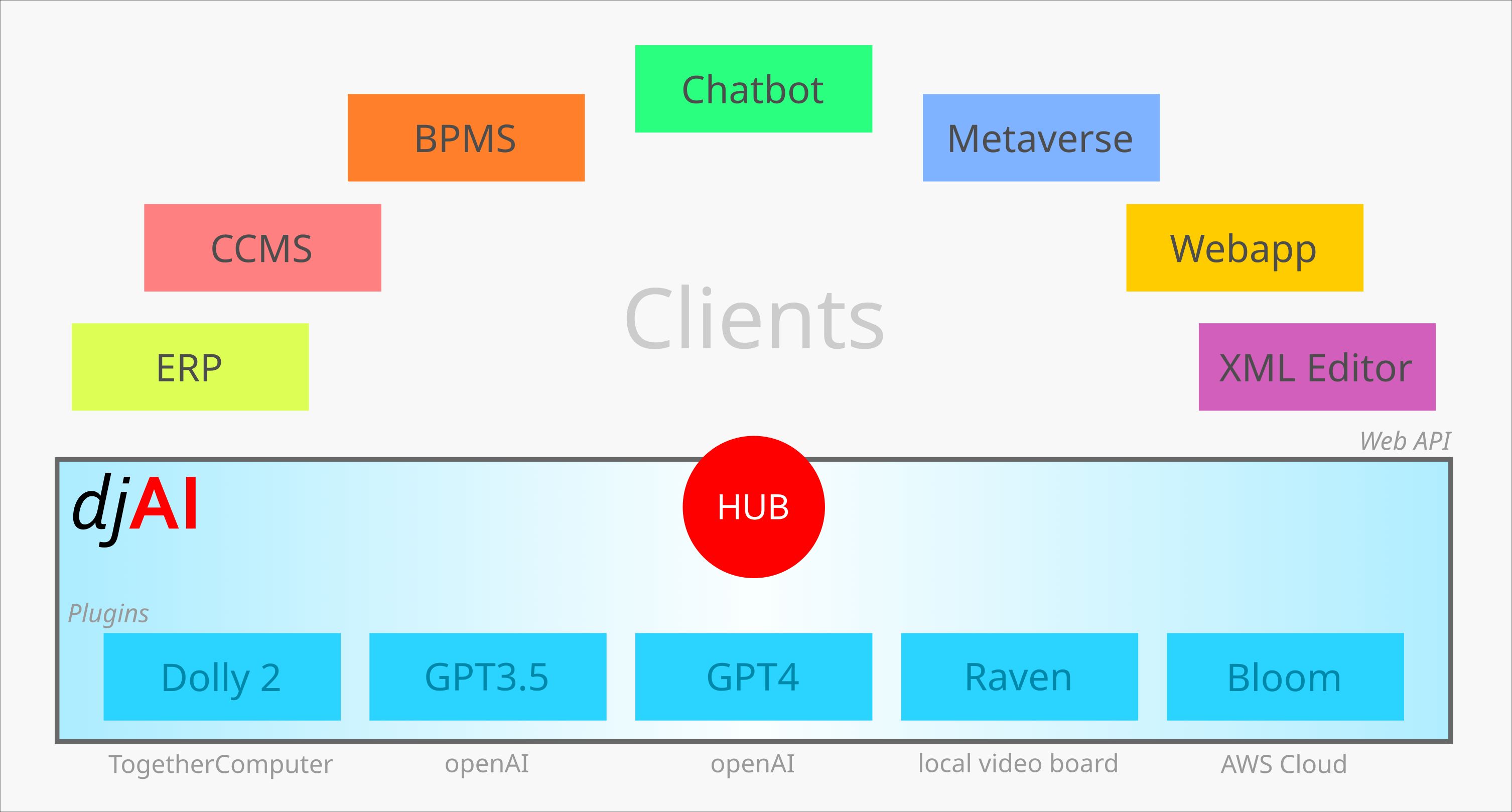

To make this easily usable in all types of applications, we've written a web-based API called djAI that provides such a system with briefing capabilities.

djAI can hold plugins for different models and organize briefing data for them, both for chat and one-shot applications. The calling application passes only the input text and the name of an operation (like "structure confusing text"), and djAI returns the response. The calling application does not need to know which AI model and briefing data are behind it, nor can users see the briefing.
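
From the caller's side, such a request could look roughly like the sketch below. The endpoint path, the JSON field names and the bearer-token header are hypothetical placeholders chosen for illustration; they are not djAI's documented interface. The request is only prepared, not sent.

```python
import json
import urllib.request


def build_request(base_url: str, operation: str, text: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) an HTTP POST carrying the operation name and input."""
    payload = json.dumps({"operation": operation, "input": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/run",                      # hypothetical endpoint
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",   # hypothetical auth scheme
        },
    )


request = build_request(
    "http://localhost:8080",            # placeholder address
    "structure confusing text",
    "<text supplied by the user>",
    api_key="local-secret",
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request).read()
```

Note that the payload contains neither a model name nor any briefing data; both stay on the server side.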

Since the calls are so simple to integrate (anything able to send an HTTP request can use it), it's also easy to compose single LLM actions into more complex operations. We have a demo based on this technology that summarizes the above text and then creates a valid DITA map from it.
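
Composing single actions can be as simple as feeding one response into the next call. The sketch below assumes a callable that takes an operation name and an input text and returns the response text; the signature, the operation names and the stand-in function are hypothetical, so the example runs without a network call.

```python
from typing import Callable

# Hypothetical signature: (operation name, input text) -> response text.
RunOperation = Callable[[str, str], str]


def run_pipeline(run: RunOperation, operations: list[str], text: str) -> str:
    """Feed the text through each operation in order, passing results along."""
    for operation in operations:
        text = run(operation, text)
    return text


# Stand-in for the real service so the sketch runs offline.
def fake_run(operation: str, text: str) -> str:
    return f"[{operation}] {text}"


result = run_pipeline(fake_run, ["summarize", "create DITA map"], "source text")
print(result)
```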

Some things we're still working on

The software is a great help already, but there are still some gaps to fill:

- Chat is not yet implemented. It basically works like the single-step operations, but the history must be maintained in a user context and re-injected into the AI with each call.

- Currently, briefing data must be provided in the model's native format, for example, a certain JSON structure for ChatGPT 3.5. We will provide an automatic conversion from DITA (with certain conventions) into the model's native format. DITA can then be edited with an XML editor or a specific briefing data editor.

- Optional integration with Cinnamon or other CMS / DMS systems would enable running the briefing development from there, including versioning and lifecycles on the briefings.

- A permission and logging system to restrict the use of certain functions and keep track of API costs.

- … and with the modular approach, we can chain generative steps with others that give a quality rating and instructions to improve. These results would then be fed back to the previous step with its original input.
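
The last item, chaining a generative step with a rating step, could be sketched as the loop below. This is only an illustration of the idea: the function signatures, the numeric score, the threshold of 8 and the round limit are assumptions, and the two stand-in functions replace what would be LLM-backed operations.

```python
from typing import Callable


def refine(
    generate: Callable[[str, str], str],        # (original input, feedback) -> draft
    rate: Callable[[str], tuple[int, str]],     # draft -> (score, instructions to improve)
    source: str,
    target_score: int = 8,
    max_rounds: int = 3,
) -> str:
    """Regenerate until the rating reaches target_score or max_rounds is used up."""
    feedback = ""
    draft = generate(source, feedback)
    for _ in range(max_rounds):
        score, feedback = rate(draft)
        if score >= target_score:
            break
        draft = generate(source, feedback)   # original input plus improvement hints
    return draft


# Stand-ins for two LLM-backed steps, so the sketch runs offline.
def fake_generate(source: str, feedback: str) -> str:
    return source.upper() if "upper" in feedback else source


def fake_rate(draft: str) -> tuple[int, str]:
    return (9, "") if draft.isupper() else (3, "use upper case")


print(refine(fake_generate, fake_rate, "draft text"))
```

The round limit matters in practice: every extra round is another pair of API calls, so an upper bound keeps cost predictable.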

Conclusion

The approach has many advantages:

- It hides briefings from users who just want a result.

- It hides software details from briefing designers.

- It hides the AI models' API secrets from users; they use local secrets instead.

- It standardizes briefings; users just pass data.

- It is flexible - writing a briefing is done at a small fraction of the time and cost of training.

- It can use various models, each for its area where it performs best.

I also believe that this approach will become more and more important and will remove the need for training in most cases:

- Today's leading LLMs have impressive logical and linguistic capabilities (including software code) and are "smart enough" to learn new tasks from a small briefing.

- Upcoming models will have better reasoning and make more out of the same briefing data.

- Upcoming models allow larger token lengths, and thus, more briefing data (for example, GPT 3.5 was limited to 4k tokens, whereas GPT 4 has an option of 32k tokens, or eight times the text length).

- Software solutions like djAI allow breaking down tasks into small steps, making them more accessible to the briefing approach.

What do you think? Please let me know your thoughts.